How to Find Data Adequacy Ahead of CECL

FASB’s guidance for estimating expected credit losses is not prescriptive, so examiners are not yet asking exactly how you plan to calculate your reserve under CECL. However, because of the shift from an incurred loss model to an expected loss model, banks need to begin loan-level data collection now as a first step toward compliance with future GAAP.

In this recent webinar, Garver Moore and Tim McPeak, principal consultants with the Sageworks advisory services group, cover how community banks can improve data quality, assure data adequacy and take the next step toward data validation and modeling. The session takes listeners through data collection methods, suggested fields for community banks to consider by methodology type, and the advantages and disadvantages of starting CECL preparations today.

The keys to preparing today, as illustrated and outlined below, are understanding the data collection methods, determining the adequacy of the data collected and filling gaps when they are identified.

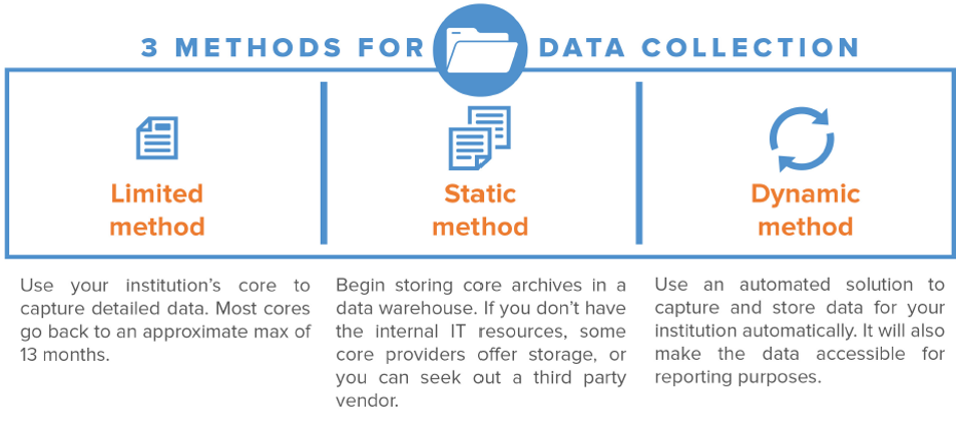

Data Collection Methods

- Limited method: Not a viable approach for most core systems due to limited data storage.

- Static method: Preserves optionality later in the project. Consider consistency and coherency.

- Dynamic method: Significantly reduces risk and offers the most optionality for use.

Data Adequacy Checklist

- The data is labeled appropriately (headers are consistently applied and understandable)

- Data does not contain duplicates (fields, rows or entities)

- There are no inconsistencies in values (e.g., truncated by 000’s vs. not truncated)

- Data is stored in the right format (e.g., numbers stored as numbers, zip codes stored as text)

- The file extracted from the core system is stored as the right file type

- File creation is automated and does not require manual steps

- Data is reliable and standardized throughout the institution, across all departments

- Data fields are standardized and governed to ensure consistency going forward

- Data storage does not have an archiving time limit (e.g., 13 months)

- Data is accessible (usable format like exportable Excel files, integrates with other solutions)

- Archiving function captures the data points required to perform a range of robust methodologies
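
Several of the checklist items above can be tested mechanically against a core-system extract. The following is a minimal sketch, assuming a single-snapshot CSV export with one row per loan. The column names (`loan_id`, `balance`, `zip`, `branch`) and the `check_extract` helper are hypothetical and would need to be adapted to the fields your core system actually produces. It covers only duplicates and storage format, not the governance or archiving items.

```python
import csv
import io
from collections import Counter

# Hypothetical column names; a real core-system extract will differ.
NUMERIC_FIELDS = ("balance",)
ZIP_FIELD = "zip"
ID_FIELD = "loan_id"


def check_extract(csv_text):
    """Return a list of readable findings for a loan-level CSV extract."""
    findings = []
    reader = csv.reader(io.StringIO(csv_text))
    headers = next(reader)

    # Duplicate fields: the same header appearing more than once.
    for name, count in Counter(headers).items():
        if count > 1:
            findings.append(f"duplicate field: {name}")

    rows = [dict(zip(headers, row)) for row in reader]

    # Duplicate rows or entities: the same loan ID appearing more than once
    # (assumes one snapshot date per file).
    for loan_id, count in Counter(row[ID_FIELD] for row in rows).items():
        if count > 1:
            findings.append(f"duplicate loan_id: {loan_id}")

    # Line 1 is the header, so data rows start at line 2.
    for line_no, row in enumerate(rows, start=2):
        # Numbers stored as numbers: the value must parse without cleanup.
        for field in NUMERIC_FIELDS:
            try:
                float(row[field])
            except ValueError:
                findings.append(
                    f"line {line_no}: {field} is not numeric: {row[field]!r}"
                )

        # Zip codes stored as text: a five-digit string keeps leading zeros.
        zip_code = row[ZIP_FIELD]
        if not (len(zip_code) == 5 and zip_code.isdigit()):
            findings.append(
                f"line {line_no}: zip looks malformed: {zip_code!r}"
            )

    return findings


# Illustrative data only; not taken from any real institution.
sample = """loan_id,balance,zip,branch,branch
1001,250000,02139,A,A
1002,"1,200",2139,B,B
1001,98000,30301,A,A
"""

for finding in check_extract(sample):
    print(finding)
```

Running a check like this on every automated extract, rather than once, is one way to notice when a field definition or export format changes between periods.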

Filling Data Gaps

If you would like to learn more, watch the on-demand CECL – Understanding Data Webinar.